Portal:Mathematics

The Mathematics Portal

Mathematics is the study of representing and reasoning about abstract objects (such as numbers, points, spaces, sets, structures, and games). Mathematics is used throughout the world as an essential tool in many fields, including natural science, engineering, medicine, and the social sciences. Applied mathematics, the branch of mathematics concerned with the application of mathematical knowledge to other fields, inspires and makes use of new mathematical discoveries and sometimes leads to the development of entirely new mathematical disciplines, such as statistics and game theory. Mathematicians also engage in pure mathematics, or mathematics for its own sake, without any application in mind. There is no clear line separating pure and applied mathematics, and practical applications are often discovered for what began as pure mathematics. (Full article...)

Featured articles

Selected image

Good articles

Did you know (auto-generated)

- ... that multiple mathematics competitions have made use of Sophie Germain's identity?

- ... that in the aftermath of the American Civil War, the only Black-led organization providing teachers to formerly enslaved people was the African Civilization Society?

- ... that in 1940 Xu Ruiyun became the first Chinese woman to receive a PhD in mathematics?

- ... that a folded paper lantern shows that certain mathematical definitions of surface area are incorrect?

- ... that although the problem of squaring the circle with compass and straightedge goes back to Greek mathematics, it was not proven impossible until 1882?

- ... that subgroup distortion theory, introduced by Misha Gromov in 1993, can help encode text?

- ... that mathematician Daniel Larsen was the youngest contributor to the New York Times crossword puzzle?

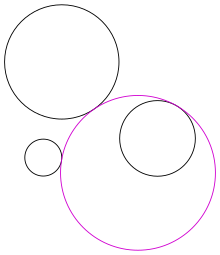

- ... that circle packings in the form of a Doyle spiral were used to model plant growth long before their mathematical investigation by Doyle?

More did you know

- ... that the Hadwiger conjecture implies that the external surface of any three-dimensional convex body can be illuminated by only eight light sources, but the best proven bound is that 16 lights are sufficient?

- ... that an equitable coloring of a graph, in which the numbers of vertices of each color are as nearly equal as possible, may require far more colors than a graph coloring without this constraint?

- ... that no matter how biased a coin one uses, flipping a coin to determine whether each edge is present or absent in a countably infinite graph will always produce the same graph, the Rado graph?

- ... that it is possible to stack identical dominoes off the edge of a table to create an arbitrarily large overhang?

- ... that in Floyd's algorithm for cycle detection, the tortoise and hare move at very different speeds, but always finish at the same spot?

- ... that in graph theory, a pseudoforest can contain trees and pseudotrees, but cannot contain any butterflies, diamonds, handcuffs, or bicycles?

- ... that it is not possible to configure two mutually inscribed quadrilaterals in the Euclidean plane, but the Möbius–Kantor graph describes a solution in the complex projective plane?

Selected article

|

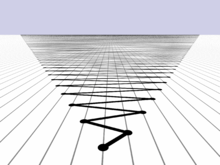

| In this shear transformation of the Mona Lisa, the central vertical axis (red vector) is unchanged, but the diagonal vector (blue) has changed direction. Hence the red vector is said to be an eigenvector of this particular transformation and the blue vector is not. Image credit: User:Voyajer |

In mathematics, an eigenvector of a transformation is a nonzero vector that the transformation merely scales by a constant factor, called the eigenvalue of that vector. Many transformations, such as diagonalizable linear maps, are completely described by their eigenvalues and eigenvectors. The eigenspace for a given factor is the set of eigenvectors having that factor as eigenvalue, together with the zero vector.

In the specific case of linear algebra, the eigenvalue problem is this: given an n by n matrix A, which nonzero vectors $x \in \mathbb{R}^n$ exist such that $Ax$ is a scalar multiple of $x$?

The scalar multiple is denoted by the Greek letter λ, so that $Ax = \lambda x$; λ is called an eigenvalue of the matrix A, and x is called an eigenvector of A corresponding to λ. These concepts play a major role in several branches of both pure and applied mathematics, appearing prominently in linear algebra and functional analysis and, to a lesser extent, in nonlinear settings.
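As a minimal sketch of the eigenvalue problem for n = 2, the Python below checks whether a given nonzero vector x satisfies Ax = λx, and computes the real eigenvalues of a 2 by 2 matrix as the roots of its characteristic polynomial λ² − tr(A)λ + det(A) = 0. The helper names (`mat_vec`, `eigenvalue_of`, `eigenvalues_2x2`) and the shear factor k = 0.5 are illustrative assumptions; the shear is assumed to have the form (x, y) ↦ (x, y + kx), which keeps the vertical axis fixed as in the Mona Lisa caption.

```python
import math
from typing import List, Optional

Matrix = List[List[float]]  # 2x2 matrix stored as a list of rows
Vector = List[float]


def mat_vec(A: Matrix, x: Vector) -> Vector:
    """Return the product Ax for a 2x2 matrix A and a 2-vector x."""
    return [A[0][0] * x[0] + A[0][1] * x[1],
            A[1][0] * x[0] + A[1][1] * x[1]]


def eigenvalue_of(A: Matrix, x: Vector, tol: float = 1e-12) -> Optional[float]:
    """Return lambda if Ax == lambda * x (within tol), else None.

    Raises ValueError for the zero vector, which is never an eigenvector.
    """
    if all(abs(c) <= tol for c in x):
        raise ValueError("an eigenvector must be nonzero")
    y = mat_vec(A, x)
    # Estimate lambda from the largest component of x (avoids dividing by ~0).
    i = max(range(2), key=lambda j: abs(x[j]))
    lam = y[i] / x[i]
    # x is an eigenvector only if every component satisfies y_j = lambda * x_j.
    if all(abs(y[j] - lam * x[j]) <= tol for j in range(2)):
        return lam
    return None


def eigenvalues_2x2(A: Matrix) -> List[float]:
    """Real roots of the characteristic polynomial t^2 - tr(A) t + det(A)."""
    tr = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    disc = tr * tr - 4 * det
    if disc < 0:
        return []  # complex-conjugate pair; not handled in this sketch
    r = math.sqrt(disc)
    return [(tr + r) / 2, (tr - r) / 2]


# Hypothetical shear with factor K = 0.5 that leaves the vertical axis fixed:
# (x, y) -> (x, y + K*x).
K = 0.5
SHEAR: Matrix = [[1.0, 0.0],
                 [K, 1.0]]

if __name__ == "__main__":
    print(eigenvalue_of(SHEAR, [0.0, 1.0]))  # vertical vector: 1.0
    print(eigenvalue_of(SHEAR, [1.0, 1.0]))  # diagonal vector: None
    print(eigenvalues_2x2(SHEAR))            # [1.0, 1.0]
```

For this shear the characteristic polynomial has the single repeated root 1, yet only the vertical axis is an eigenspace, so the shear is an example of a transformation that its eigenvectors alone do not fully describe.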

It is common to prefix any natural name for the vector with eigen instead of saying eigenvector: for example, eigenfunction if the eigenvector is a function, eigenmode if the eigenvector is a harmonic mode, eigenstate if the eigenvector is a quantum state, and so on. The same applies to eigenvalues, as in eigenfrequency if the eigenvalue is (or determines) a frequency. (Full article...)


Subcategories

Algebra | Arithmetic | Analysis | Complex analysis | Applied mathematics | Calculus | Category theory | Chaos theory | Combinatorics | Dynamical systems | Fractals | Game theory | Geometry | Algebraic geometry | Graph theory | Group theory | Linear algebra | Mathematical logic | Model theory | Multi-dimensional geometry | Number theory | Numerical analysis | Optimization | Order theory | Probability and statistics | Set theory | Statistics | Topology | Algebraic topology | Trigonometry | Linear programming

Mathematics | History of mathematics | Mathematicians | Awards | Education | Literature | Notation | Organizations | Theorems | Proofs | Unsolved problems

Topics in mathematics

| General | Foundations | Number theory | Discrete mathematics |
|---|---|---|---|
| Algebra | Analysis | Geometry and topology | Applied mathematics |


Related portals

WikiProjects

The Mathematics WikiProject is the center for mathematics-related editing on Wikipedia. Join the discussion on the project's talk page.

In other Wikimedia projects

The following Wikimedia Foundation sister projects provide more on this subject:

- Commons: Free media repository
- Wikibooks: Free textbooks and manuals
- Wikidata: Free knowledge base
- Wikinews: Free-content news
- Wikiquote: Collection of quotations
- Wikisource: Free-content library
- Wikiversity: Free learning tools
- Wiktionary: Dictionary and thesaurus

Amalie Emmy Noether (US: /ˈnʌtər/, UK: /ˈnɜːtə/; German: [ˈnøːtɐ]; 23 March 1882 – 14 April 1935) was a German mathematician who made many important contributions to abstract algebra. She proved Noether's first and second theorems, which are fundamental in mathematical physics. She was described by Pavel Alexandrov, Albert Einstein, Jean Dieudonné, Hermann Weyl and Norbert Wiener as the most important woman in the history of mathematics. As one of the leading mathematicians of her time, she developed theories of rings, fields, and algebras. In physics, Noether's theorem explains the connection between symmetry and conservation laws. Noether was born to a Jewish family in the Franconian town of Erlangen; her father was the mathematician Max Noether. She originally planned to teach French and English after passing the required examinations but instead studied mathematics at the University of Erlangen, where her father lectured. After completing her doctorate in 1907 under the supervision of Paul Gordan, she worked at the Mathematical Institute of Erlangen without pay for seven years. At the time, women were largely excluded from academic positions. In 1915, she was invited by David Hilbert and Felix Klein to join the mathematics department at the University of Göttingen, a world-renowned center of mathematical research. The philosophical faculty objected, however, and she spent four years lecturing under Hilbert's name. Her habilitation was approved in 1919, allowing her to obtain the rank of Privatdozent. (Full article...)
